The improvement efforts of colleges and universities are most promising when they are based on evidence of the performance and experience of their students inside and outside the classroom. In addition, institutions are expected to present evidence of their achievements, and of how they use data to inform improvement efforts, when responding to heightened demands for accountability and to multiple pressures to increase student persistence and completion, support diversity, and ensure high-quality learning for all students.

The National Survey of Student Engagement (NSSE) provides institutions with data and reports about critical dimensions of educational quality. Whether a campus is interested in assessing the amount of time and effort students put into their studies or the extent to which students utilize learning opportunities on campus, NSSE provides colleges and universities with diagnostic, actionable information that can inform efforts to improve the experience and outcomes of undergraduate education.

NSSE results can inform and structure conversations about enhancing student learning and success across campus offices and projects, including enrollment management and retention, marketing and communications, faculty development, learning support, and student housing. As an assessment instrument, NSSE can be used to identify both areas of strength and opportunities for growth, helping to align learning and the campus environment more closely with student needs and expectations.

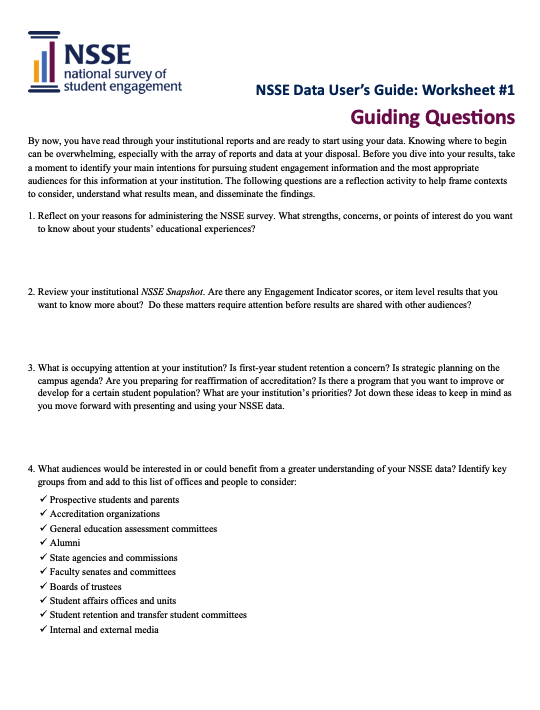

Making NSSE data accessible and useful is key to engaging various campus audiences in identifying and analyzing institutional and program shortcomings and in developing targeted strategies for continuous improvement, both of which are critical steps in institutional growth and change. How can institutions determine who is interested in NSSE results? What are the best ways to connect campus groups and committees with this information? What audiences could use this information in responding to campus challenges and opportunities?