Often lauded as transformational educational experiences, High-Impact Practices (HIPs) play a key role in the undergraduate experience. Yet the success of these practices depends in part on how they are implemented, which can vary between programs and institutions. The eagerness to label practices high-impact, or to focus exclusively on participation in these activities, may have distracted educators and administrators from ensuring that these activities provide the particular, rich kind of experience that makes them so beneficial (Kuh & Kinzie, 2018).

With support from Lumina Foundation, we set out to assess the quality of HIPs. In spring 2019, we surveyed more than 20,000 college students at close to five dozen U.S. institutions about their HIP experiences. The information presented here is elaborated in our full report (Kinzie et al., 2020).

What Makes It "High-Impact"?

Kuh and O'Donnell (2013) identified eight key elements that distinguish high-impact educational activities from the typical classroom experience. To be considered a HIP, an activity must involve:

- Performance expectations set at appropriately high levels

- Significant investment of time and effort by students over an extended period of time

- Interactions with faculty and peers about substantive matters

- Frequent, timely, and constructive feedback

- Experiences with diversity wherein students are exposed to and must contend with unfamiliar people and circumstances

- Periodic, structured opportunities to reflect and integrate learning

- Opportunities to discover relevance of learning through real-world applications

- Public demonstration of competence

Using these as a guide, we designed a survey that mapped each of the eight elements to questions about the student's HIP, so that we could assess the quality of the experience. For this set of questions, we considered seven practices: first-year seminars, service-learning, learning communities, undergraduate research, internships or teaching experiences or the like, study abroad, and culminating senior experiences. Other practices exist, but these were our focus. NSSE (the National Survey of Student Engagement) asks students about their participation in all of these except first-year seminars.

Once the data were collected, we set a threshold for each of the elements above to gauge whether an experience met expectations. For instance, for a HIP to count as requiring a sufficiently "significant investment of time and effort," we proposed that students must report spending at least "more time" on the activity than on their typical learning experiences. However, some HIPs place greater weight on certain elements than others. We therefore reviewed the relevant scholarship on HIPs to judge which elements were emphasized most for each practice, so that we could share results that were better attuned to each particular experience and more valuable to practitioners and educators.
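To make the threshold idea concrete, here is a minimal sketch of how a single element could be scored. It assumes a hypothetical five-point response scale for the time-and-effort item; the scale labels, `TIME_SCALE`, and `meets_threshold` are illustrative inventions, not the survey's actual wording or coding. Only the cutoff itself (at least "more time") comes from the text above.

```python
# Hypothetical ordinal scale for the time-and-effort item (lowest to highest).
# The real survey wording and coding are assumptions, not taken from the report.
TIME_SCALE = ["much less time", "less time", "about the same", "more time", "much more time"]

# Cutoff described in the text: at least "more time" than typical courses.
TIME_THRESHOLD = "more time"


def meets_threshold(response: str, scale: list[str], threshold: str) -> bool:
    """Return True if an ordinal response sits at or above the threshold level."""
    # A response's position on the ordered scale stands in for its rank.
    return scale.index(response) >= scale.index(threshold)


if __name__ == "__main__":
    for answer in ["about the same", "more time", "much more time"]:
        print(answer, meets_threshold(answer, TIME_SCALE, TIME_THRESHOLD))
```

Each element would need its own scale and cutoff. Treating the responses as ordered ranks keeps the rule simple: any response at or above the cutoff counts as meeting the criterion.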

The chart below shows the share of students in each HIP who met our criteria for "high quality" on each element, with larger proportions shaded darker. Cells that display a percentage mark elements strongly emphasized in the literature for that practice. Shaded cells without a percentage mark elements that were emphasized, but not strongly. Cells are white where the literature does not emphasize the element.

For example, substantive interaction with faculty and peers is a crucial part of what makes undergraduate research a valuable experience. About two in three students who participated in undergraduate research met our quality criteria for this element, meaning that they met frequently with faculty and that those meetings focused on what was being learned in the experience. An even greater share (88%) met our quality criteria for receiving rich feedback from their faculty member or supervisor, although our literature review did not identify feedback as a crucial component of this practice. Only 20% of students in undergraduate research said they frequently interacted with people different from themselves or often found themselves in unfamiliar circumstances, which were our criteria for a high-quality diversity experience. This element was not a focus of existing research on undergraduate research (white cell).

With some exceptions, between 40% and 70% of students across HIPs met our criteria for high-quality experiences. A fair number of students were in HIPs that demanded significant time and effort, in which they received timely feedback from faculty, reflected on what they had been learning, and could discover the relevance of their learning to the "real world." The finding that few students had high-quality diversity experiences in first-year seminars and service-learning courses might alarm some educators ("But my service-learning course really challenges my students!"), but the goal of this project was to assess quality and provide useful diagnostic information about the practices.

Addressing Equity in HIPs

One of the goals of this project was to examine equity in the quality of HIPs: do students of color and first-generation students report levels of quality in their HIPs similar to those reported by other students? Although our analysis was limited to students who participated in HIPs, we hoped to identify where, if anywhere, equity gaps occur.

The following plot shows the share of white students and underrepresented minority (URM) students (in this case, Black or African American, Hispanic or Latino, and Native American or Alaska Native students) who met our criteria for high quality on each element. Although the proportions are similar in many cases, some noticeable differences exist. For instance, compared with white students, a slightly greater share of URM students in first-year seminars met our quality thresholds for receiving rich feedback and for having substantive interactions with faculty and peers, but a slightly smaller share of URM students in internships and culminating senior experiences reported that those experiences demanded significant time and effort.
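The group comparison reduces to a simple proportion per group, followed by a difference between groups. The sketch below uses a handful of made-up records for one element. The group labels mirror the text, but the data, `records`, and `share_meeting_criteria` are hypothetical and do not come from the report.

```python
from collections import defaultdict

# Hypothetical records for a single element (e.g. rich feedback):
# (student group, whether the student met the quality threshold).
records = [
    ("URM", True), ("URM", True), ("URM", False),
    ("White", True), ("White", False), ("White", False), ("White", True),
]


def share_meeting_criteria(rows):
    """Return, for each group, the proportion of students who met the threshold."""
    met = defaultdict(int)
    total = defaultdict(int)
    for group, ok in rows:
        total[group] += 1
        met[group] += int(ok)
    return {group: met[group] / total[group] for group in total}


shares = share_meeting_criteria(records)
# A positive gap means a larger share of URM students than white students met the threshold.
gap = shares["URM"] - shares["White"]
print(shares, round(gap, 3))
```

With these toy records, two of three URM students and two of four white students meet the threshold, so the gap is about 0.167 (roughly 17 percentage points). A real analysis would repeat this for each HIP and element and would also need to consider sample sizes before reading much into small gaps.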

Overall Quality in HIPs

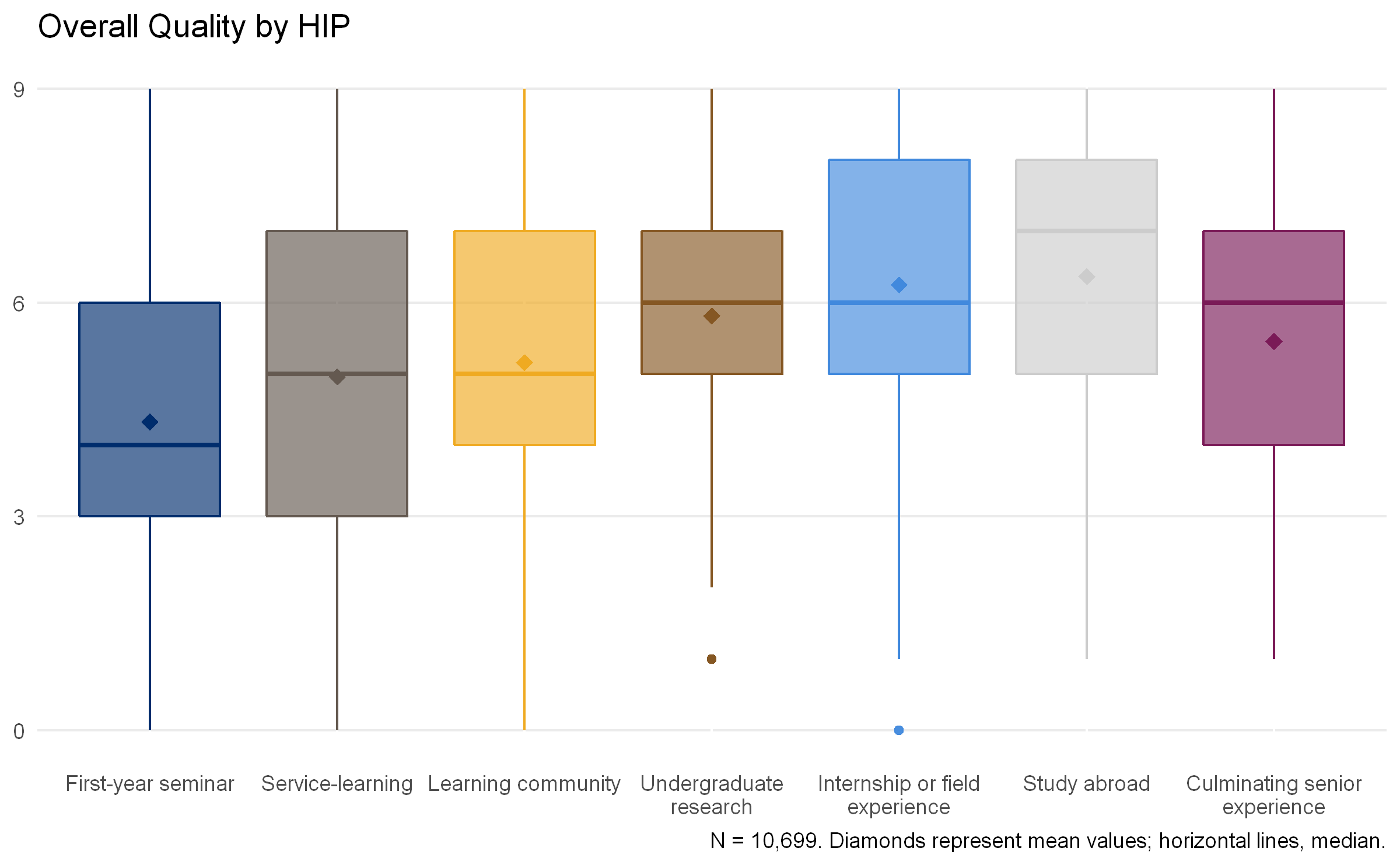

Although this level of detail is useful, it can make the overall picture of quality painted by these eight dimensions difficult to appreciate. That is why we developed a simpler metric of overall quality: the number of elements for which a student's experience met our high-quality criteria, summarized in the box-and-whisker plot below. On average, students met our criteria for between four and seven of the nine elements, depending on the HIP. Students in study abroad and in internships, teaching experiences, or the like tended to meet the criteria for the greatest number of elements. Those who had done research with faculty reported fairly consistent, and fairly high-quality, experiences, while the quality of the experience varied somewhat more among students in service-learning courses.
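The overall quality metric is a count of elements met, and a box-and-whisker plot summarizes the spread of those counts. The sketch below shows the arithmetic on four hypothetical students, using only four illustrative elements for brevity rather than the report's full set. The element keys, the data, and `overall_quality` are assumptions made for illustration.

```python
from statistics import mean, median, quantiles

# Hypothetical element-level results: True if the student met the quality
# criteria for that element. Keys and values are illustrative only.
students = [
    {"time_effort": True, "feedback": True, "reflection": False, "diversity": False},
    {"time_effort": True, "feedback": True, "reflection": True, "diversity": False},
    {"time_effort": False, "feedback": True, "reflection": True, "diversity": True},
    {"time_effort": True, "feedback": True, "reflection": True, "diversity": True},
]


def overall_quality(student: dict[str, bool]) -> int:
    """Count the elements for which a student met the quality criteria."""
    # True counts as 1 and False as 0, so summing the values gives the count.
    return sum(student.values())


scores = [overall_quality(s) for s in students]  # [2, 3, 3, 4]
# Quartiles plus min and max give the five numbers a box-and-whisker plot draws.
q1, q2, q3 = quantiles(scores, n=4)
print(scores, mean(scores), median(scores), (min(scores), q1, q2, q3, max(scores)))
```

Counting elements treats every element as equally important. That is a simplification: it sets aside the per-practice emphasis drawn from the literature in exchange for a single, easily compared number.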

We hope that the survey, the literature matrix, and the data shared with Lumina Foundation and the participating institutions will push educators and practitioners to move beyond "mere participation" and turn a critical, constructive eye toward understanding the qualities that make HIPs "high-impact."

See more findings and details in our full report.

Resources

Kinzie, J., McCormick, A. C., Gonyea, R. M., Dugan, B., & Silberstein, S. (2020, July). Assessing quality and equity in high-impact practices: Comprehensive report. Indiana University Center for Postsecondary Research. https://nsse.indiana.edu/research/special-projects/hip-quality/index.html

Kuh, G., & Kinzie, J. (2018, May 1). What really makes a 'high-impact' practice high impact? Inside Higher Ed. https://www.insidehighered.com/views/2018/05/01/kuh-and-kinzie-respond-essay-questioning-high-impact-practices-opinion

Kuh, G., & O'Donnell, K. (2013). Ensuring quality & taking high-impact practices to scale. Washington, DC: Association of American Colleges and Universities.